ChatGPT: Beautiful Liar!

I promise this article has nothing to do with Beyoncé & Shakira! Rather, it’s about how ChatGPT fabricates wrong information and describes it so beautifully that it appears more authentic than the truth. It appears that ChatGPT’s LLM (large language model) algorithm incorrectly overrides what it has learned with its own interpretation, and does so often enough that its responses look far more erratic than Russian roulette.

A Python developer emailed us:

Your Python APIs not working as expected …

This email was especially concerning since it came from one of our biggest corporate customers in Europe. They had already deployed plenty of projects using mesibo, although this was their first time using the mesibo Python APIs.

The strange part of this email was the code they shared: a mix of mesibo APIs that we don’t even recognize, deprecated APIs, and the latest APIs. We were puzzled by this fusion, especially since our APIs and tutorials are well documented and nowhere have we published a code sample like it.

So we asked him where he got this sample code, and he replied:

I got it from ChatGPT

Ouch!! We didn’t expect customers to ask ChatGPT for mesibo help rather than referring to the documentation. But with the exploding popularity of CultGPT, this is just the beginning (there was a talk at Stanford last week: “Can ChatGPT diagnose me?”).

Frankly, we would have loved it if ChatGPT had done the job, or even done it fairly well, or had declined to answer. The real problem was not the wrong answer itself but how meticulously it was composed: it felt more authentic than the correct answer, to the extent that the customer complained about the mesibo API not working rather than doubting ChatGPT’s response.

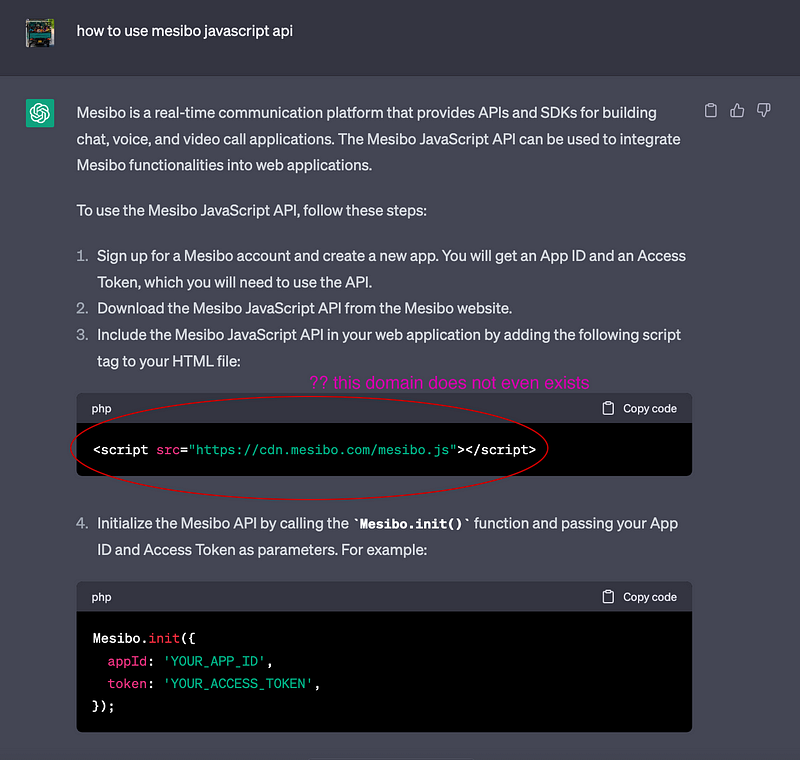

It wasn’t difficult to reproduce what our customer experienced. We learned that ChatGPT’s LLM (large language model) algorithm incorrectly overrides what it has learned with its own interpretation. For example, the mesibo JavaScript API is documented as hosted on api.mesibo.com. However, lots of websites host their APIs on a CDN subdomain such as cdn.example.com. ChatGPT combined the two patterns and produced a fictitious domain, cdn.mesibo.com, which is incorrect. The answer would have been correct had ChatGPT fallen back on its own interpretation only where information was missing from its training data.

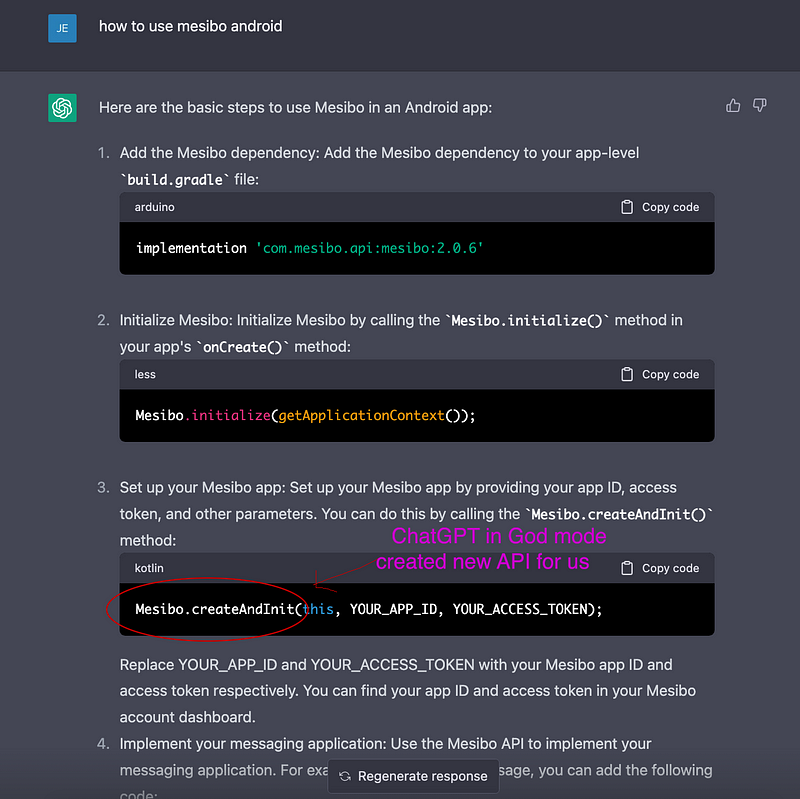
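One cheap guard against the domain mix-up described above is to check every URL in a generated snippet against the hosts the documentation actually names. The following is a minimal sketch, not a mesibo tool: the allowlist contains only api.mesibo.com (the one host mentioned in this article), and the function name, the regular expression, and the sample snippet with its `example.js` path are all hypothetical.

```python
import re
from urllib.parse import urlparse

# Assumption: the set of documented hosts. Only api.mesibo.com comes from the
# article; a real allowlist would be built from the official documentation.
DOCUMENTED_HOSTS = {"api.mesibo.com"}

# Rough pattern for http(s) URLs embedded in code or markup.
URL_PATTERN = re.compile(r"https?://[^\s\"'<>)]+")


def undocumented_hosts(snippet, documented=DOCUMENTED_HOSTS):
    """Return the hostnames found in snippet's URLs that are not documented."""
    hosts = {urlparse(url).hostname for url in URL_PATTERN.findall(snippet)}
    return sorted(h for h in hosts if h and h not in documented)


# Hypothetical generated snippet; the file name is made up for illustration.
generated = '<script src="https://cdn.mesibo.com/example.js"></script>'
print(undocumented_hosts(generated))
```

Run against the sample, the check flags `cdn.mesibo.com`, while a snippet that loads from `api.mesibo.com` passes. It only catches invented hosts, not invented function names, so it complements reading the documentation rather than replacing it.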

In another example, instead of using what it had learned from the mesibo website, it synthesized a new API and even described it. Minority Report has some influence here :)

On a lighter note, since ChatGPT performed quite badly at producing correct responses, those meticulously written steps sounded a bit political: nice to read but totally incorrect :))

ChatGPT is amazing, but it needs to fix the illusory truth effect it exhibits right now. Until then, some caution is in order.